
December 3, 2025

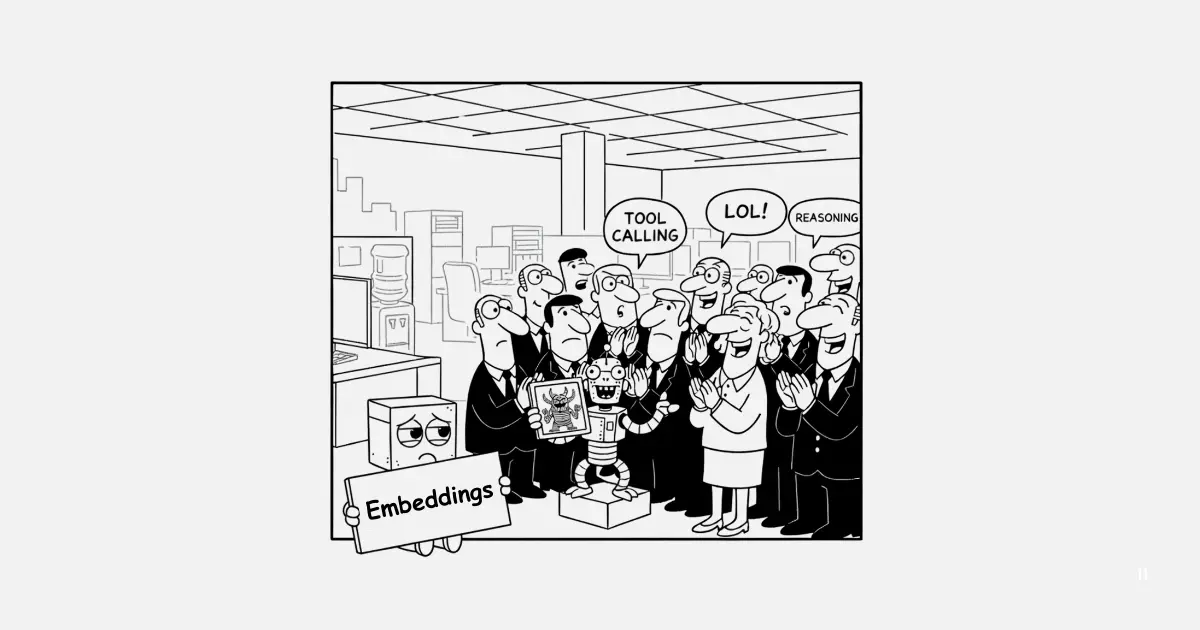

Embeddings Are AI’s Red-Headed Stepchild

Embedding models are the red-headed stepchild of AI. Not as sexy as image generation, nor as headline-grabbing as LLM chatbots, nor as apocalyptic as predictions of artificial superintelligence, semantic embeddings are esoteric and technical, and regular consumers haven’t got much direct use for them.

TL;DR

- Embedding models are foundational to AI but less recognized than image generators or chatbots.

- They represent semantics as vectors in high-dimensional spaces, a concept improved by neural networks and transformers.

- Generative models and embedding models share similar transformer architectures but differ in training and use (unidirectional vs. bidirectional attention).

- Historically, generative models were thought to be less suitable for embeddings due to the curse of dimensionality, but this is changing with smaller, high-performance models.

- Adapting generative models for embeddings is economical because it reuses existing pre-training, and it allows knowledge transfer, as seen with multimodal embeddings.

- Despite not having flashy demonstrations, embedding models perform critical real-world tasks like information retrieval, classification, and fraud detection.
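
Two of the points above, semantics as vectors and unidirectional vs. bidirectional attention, can be made concrete with a small sketch. Everything in it is an assumption for illustration: the 4-dimensional vectors are made-up numbers rather than output from a real embedding model, and `cosine_similarity` and `attention_mask` are hypothetical helper names, not part of any library.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean similar direction."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

def attention_mask(seq_len, bidirectional):
    """Boolean mask where entry [i, j] is True if token i may attend to token j."""
    full = np.ones((seq_len, seq_len), dtype=bool)
    # Unidirectional (causal) attention keeps only the lower triangle,
    # so each token sees itself and earlier tokens, never later ones.
    return full if bidirectional else np.tril(full)

# Toy "embeddings" (illustrative values only, not from a trained model).
cat = np.array([0.9, 0.1, 0.0, 0.2])
kitten = np.array([0.8, 0.2, 0.1, 0.3])
invoice = np.array([0.0, 0.9, 0.8, 0.1])

# Related concepts should point in similar directions.
print("cat vs kitten: ", round(cosine_similarity(cat, kitten), 3))
print("cat vs invoice:", round(cosine_similarity(cat, invoice), 3))

# Generative-style (unidirectional) vs. embedding-style (bidirectional) masks.
print(attention_mask(3, bidirectional=False).astype(int))
print(attention_mask(3, bidirectional=True).astype(int))
```

In this toy setup, "cat" and "kitten" score far closer to each other than "cat" and "invoice", which is the property retrieval and classification rely on. The two masks show the architectural difference in miniature: the unidirectional mask hides later tokens from earlier ones, while the bidirectional mask lets every token see the whole sequence.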
